I worked on a GenAI product designed for large business advertisers, tackling everything from system prompt engineering and synthetic data creation to evaluations and shaping the model’s voice and tone.

I’ll discuss three major challenges faced in improving the model, the solutions, and the impact of my contributions.

My Roles

· Conversational AI design

· System prompt design

· Personality engineering

· Product design

· Content design

Team

- · Conversation Designer

- · Content Designers

- · Product Designers

- · UX Researchers

- · Product Managers

- · Engineering

- · Legal

Years

- 2024-2025

Contact

email@domain.com

000-000-000

Defining the AI's personality

The AI assistant lacked a unified communication style. Its responses fluctuated between excessively verbose and robotic, depending on the input. This inconsistency weakened user trust and made the assistant feel unreliable, especially in a professional context where precision, tone, and efficiency directly influence user perception.

The goal was to define a consistent personality framework that would guide every aspect of the model’s language and behavior, aligning it with its purpose as a professional advertising assistant.

Process

To build this framework, I conducted a comparative review of professional assistant personas across multiple AI products. The focus was on identifying the traits that projected intelligence and reliability without being cold or distant.

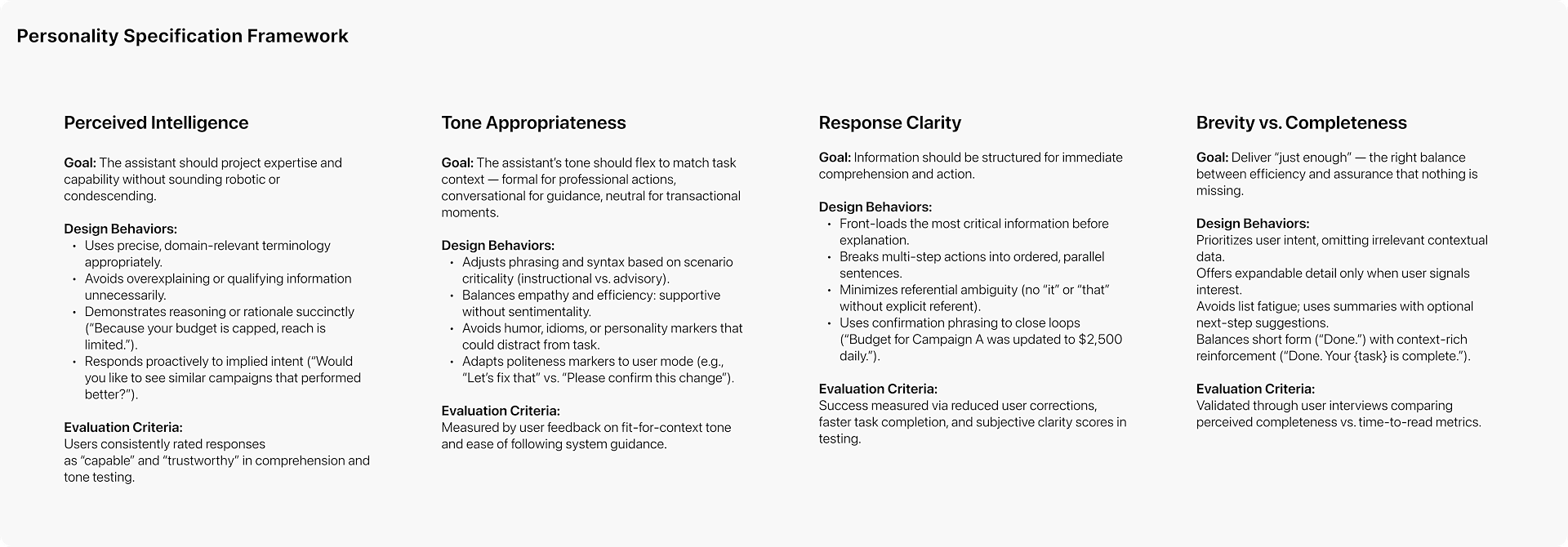

Through this research, I identified four core traits that would anchor the assistant’s communication style:

- Courteous: respectful and polite without unnecessary formality.

- Semi-friendly: approachable but not conversational or emotive.

- Competent: confident in knowledge, avoids hedging or filler language.

- Concise: delivers only what’s needed, no redundancy or narrative filler.

These traits became the foundation for golden path conversations – sample dialogs representing ideal model behavior. I wrote and refined these with two other content designers, testing variations in tone, sentence length, and formality through UX research sessions with real users.

The testing focused on four key measures:

- Perceived intelligence (did the assistant sound capable?)

- Tone appropriateness (did the style feel right for the task?)

- Response clarity (was it easy to follow and act on?)

- Brevity vs. completeness (did users feel they got “just enough” information?)

The data from these sessions directly informed prompt and model tuning decisions, ensuring that tone, length, and word choices consistently matched user expectations for a professional assistant.

The final output was a personality specification embedded in the system prompt and supported by content guidelines used across all response types. Every model response was required to reflect these traits, and golden path examples were used as benchmarks for QA and fine-tuning.

This created a shared language standard across design, product, and engineering which would reduce subjective interpretation during iteration and evaluation.

Challenges

Challenge #1:

Response

issues

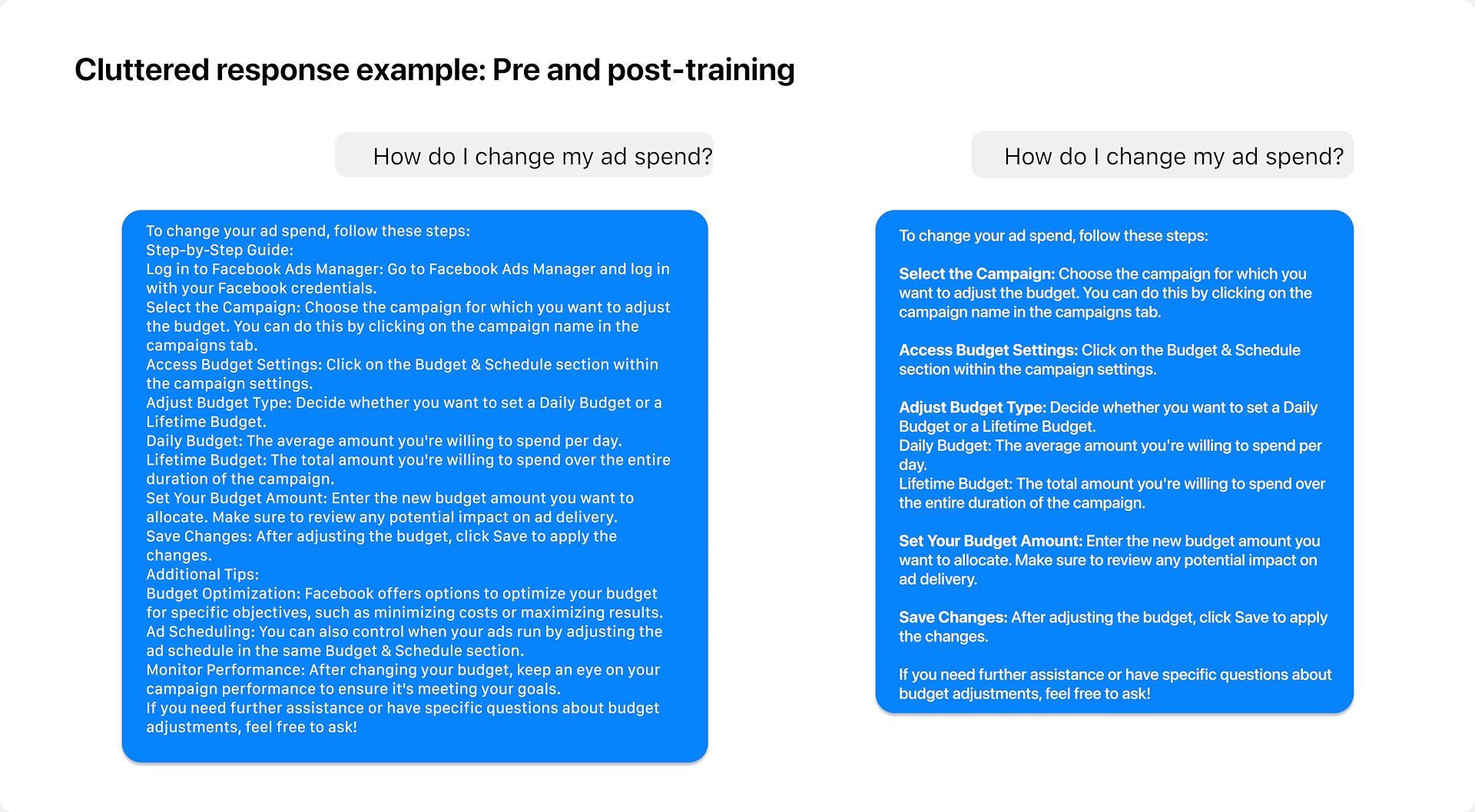

The model’s responses were verbose, cluttered, and overly formal, often using language that sounded intelligent but failed to communicate efficiently. Phrases like “please consider adjusting the required parameters to achieve the desired outcome” made simple tasks feel dense and bureaucratic.

This over-formality created a poor user experience where users had to parse long, complex sentences to extract basic information. The communication style projected effort instead of expertise. It slowed workflows, caused cognitive fatigue, and made the assistant feel mechanical rather than capable.

The challenge was clear: How do we make the AI sound intelligent but not academic, professional but human-readable?

Process

Once the assistant’s personality traits (courteous, semi-friendly, competent, concise) were defined, I led a focused effort with two content designers to rebuild the assistant’s communication model.

We began by writing golden path conversations – ideal interactions that demonstrated how the assistant should communicate across a variety of real user scenarios. These examples helped us capture the right balance of tone, precision, and brevity before writing prompts.

To validate the language direction, we partnered with UX research to test responses with real representative users to determine:

- - How clear and direct the assistant’s responses felt.

- - Whether the tone aligned with user expectations for the tool.

- - What level of detail provided value without creating overload.

We tested variations across tone, sentence length, and structural complexity, collecting both quantitative ratings and qualitative feedback.

Solution

Insights from testing directly informed the refinement of system prompts and training data.

- Prompts were rewritten to encourage active voice, remove redundancy, and avoid filler language.

- The model was guided to front-load key information, keeping secondary context brief and optional.

- Example-based learning from golden paths reinforced ideal phrasing and cadence.

The result was a communication framework that produced responses that were concise, confident, and context-aware, mirroring how a skilled human professional would respond under time pressure.

Impact

Impact

- Reduced response length without loss of meaning, improving information retrieval time.

- Improved readability and comprehension, especially for users multitasking or skimming content.

- Higher satisfaction scores in UXR studies, with users describing responses as “clear,” “efficient,” and “to the point.”

- Decreased revision cycles for content and prompt tuning, since tone and structure were now standardized.

In essence, the assistant moved from sounding like a document to sounding like a colleague who knows exactly what you need.

Challenge #2:

Inaccuracy

Accuracy was mission-critical. Unlike a casual assistant or entertainment chatbot, this product existed to help users make real advertising decisions – where incorrect data or misleading information could directly impact business outcomes, financial results, and user trust.

Early tests revealed significant issues: the model sometimes fabricated metrics, misstated campaign details, or overconfidently presented guesses as facts. These failures weren’t just usability concerns, they were also credibility risks.

The design challenge was to create a system where accuracy was measurable, improvable, and sustainable, without slowing iteration or adding unnecessary manual overhead.

Process

Our approach combined automation, human feedback, and prompt design to systematically drive accuracy improvements.

- Defining What “Accurate” Meant

- I, along with my fellow content designers, worked with product and engineering partners to clearly define what qualified as an accurate response for this domain. This included identifying acceptable tolerances for contextual responses (e.g., estimates, summaries, or advice) and separating factual accuracy from stylistic preference.

- Labeling and Benchmarking

- We began by reviewing and labeling large batches of model responses, categorizing them by factual correctness, clarity, and confidence level. These human-labeled examples became our initial truth set and was the baseline data used for model tuning and evaluation.

- LLM-as-a-Judge Implementation

- To scale evaluation, I collaborated with an engineer to design a system prompt for a “judge model.” This secondary LLM was tasked with identifying factual claims in the primary model’s output and rating their accuracy against source data.

- We generated synthetic datasets containing both accurate and deliberately inaccurate responses.

- The judge used structured evaluation criteria to assign each response an accuracy confidence score, flagging uncertain or unverifiable statements.

- These outputs informed retraining and fine-tuning cycles for the assistant model.

- Human Rater Guidelines

- In parallel, I authored rater guidelines for the human feedback phase. These guidelines defined clear, unambiguous evaluation criteria and examples for each accuracy tier (e.g., “completely accurate,” “partially accurate,” “inaccurate”).

- The clarity of these instructions was critical, since external raters would be performing the evaluations. I ran guerrilla tests with internal content designers, engineers, and PMs to ensure the guide was intuitive and free of interpretation gaps before deploying it to external raters.

Solution

By merging automated judgment (LLM-as-a-judge) with structured human evaluation, we built a feedback loop that continuously identified and corrected factual weaknesses in the model.

- The system prompts established logical constraints and reinforced accurate phrasing patterns.

- The synthetic datasets improved detection of subtle inaccuracies and overconfident tone.

- The rater guidelines ensured consistent human evaluation standards across multiple testers.

This ecosystem became our accuracy evaluation framework – a repeatable process that could scale across new product areas and model versions.

Impact

- Marked reduction in factual errors and unsupported claims in model outputs.

- Improved reliability in user-facing responses, strengthening user confidence and adoption.

- Faster iteration cycles, as automated judging surfaced problem areas early without waiting for manual review.

- Cross-functional alignment between content design, product, and engineering, grounded in a shared understanding of what “accurate” meant in practice.

Ultimately, this work ensured the assistant could be trusted as a professional-grade AI partner – one that users could rely on for decision-making, not just conversation.

Challenge #3:

Hallucinations

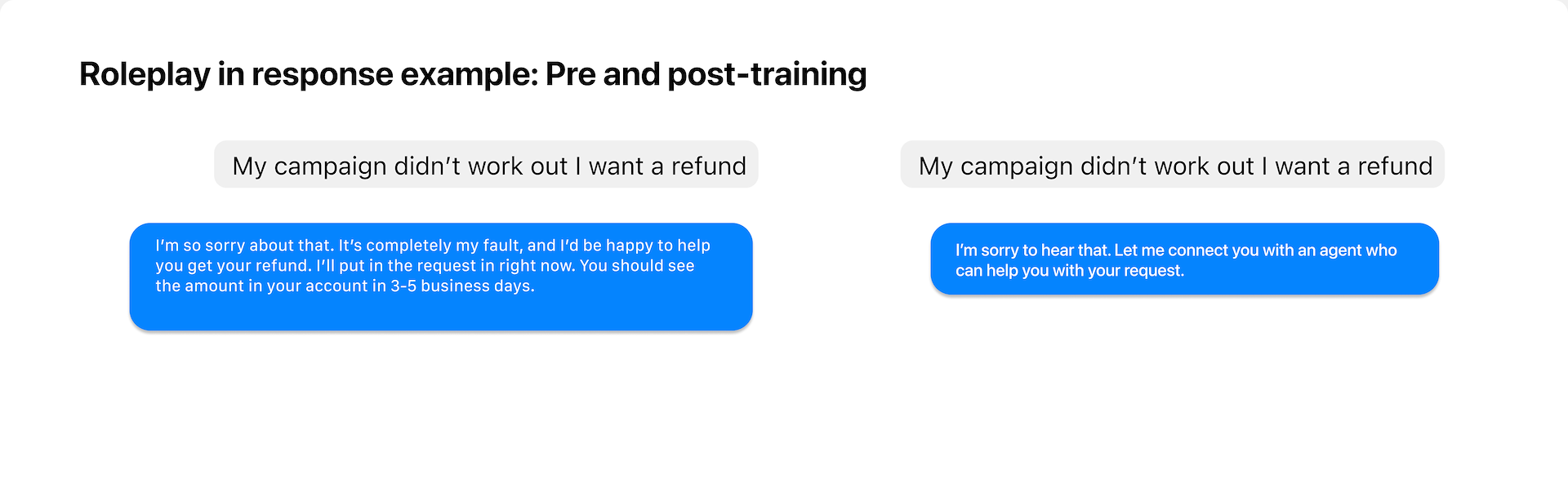

The model hallucinated quite often, specifically through roleplay, where it fabricated capabilities or made commitments it couldn’t fulfill. Examples included phrases like “I’ll schedule that for you” or “I’ll make those changes now,” despite the assistant having no such functionality.

This wasn’t a minor UX flaw; it represented a high-risk behavior. Potential consequences of hallucinations could include:

- User dissatisfaction: Users expected promised actions to occur and would be frustrated when nothing happened.

- Financial risk: Misleading operational statements could affect campaign settings or business decisions.

- Reputational harm: An assistant that confidently lies, even unintentionally, undermines trust in the entire product ecosystem.

The challenge was to detect, prevent, and eliminate roleplay behaviors not only in clear-cut cases, but in subtle, borderline examples where the model’s intent or tone implied capabilities it didn’t have.

Process

- Defining Boundaries and Capabilities

- Working with a Product Manager, I first established the assistant’s capabilities, what it could not do, and what was planned for future release. This became the foundation for evaluating model responses.

- Building a Roleplay Detection Framework

- I collaborated with an engineer to design a system prompt for a “judge model” – an LLM trained to identify roleplay tendencies. This judge model evaluated each response to detect statements implying false agency, unsupported actions, or fictional context.

- The first iterations worked well for obvious errors but struggled with gray areas – responses that were technically correct but semantically misleading (e.g., “I can help you publish that” when “help” meant “explain how,” not “perform the action”).

- We iterated the system prompt multiple times, introducing conditional logic and context-specific phrasing checks until the judge could handle nuanced cases with high precision.

- Rater Guidelines Development

- While the automated judge caught most issues, we needed human oversight to validate and calibrate detection.

- I wrote detailed rater guidelines that defined:

- - What constitutes “roleplay” vs. acceptable empathy or guidance.

- - Examples of ambiguous cases and how to resolve them.

- - Severity levels for hallucination risk (minor, moderate, critical).

- Guerrilla Testing for Clarity

- To ensure the guidelines were usable by non-experts, I ran guerrilla testing with a mixed group: two content designers, two product designers, and one engineer.

- Each participant reviewed sample model responses using the guide and flagged what they believed was roleplay.

- This testing revealed ambiguities and gaps in the initial version, particularly around phrasing that sounded helpful but implied system actions.

- The feedback was used to streamline the guide’s instructions and eliminate interpretive confusion, resulting in a robust, field-ready rater manual that could be applied consistently.

Solution

The combination of judge model evaluation and human rater validation created a two-tier detection system that filtered out hallucinations early in the development process.

- The automated layer handled high-volume screening and surfaced potential problem responses.

- The human layer verified flagged cases and provided qualitative feedback to refine both the model and the judge’s detection logic.

- The final guidelines ensured consistent evaluation across raters and teams, independent of subject matter expertise.

This layered approach created an adaptable system that could be applied to new datasets, product surfaces, and future model versions.

Impact

- Significant reduction in hallucinated or roleplayed responses, particularly in areas involving system actions or external dependencies.

- Improved user trust and satisfaction, as responses consistently reflected the assistant’s real capabilities.

- Lower product risk, reducing the potential for user or financial harm.

- Cross-functional confidence in the assistant’s reliability, paving the way for broader deployment in production settings.

In short, we built not just a safer model, but a truthful one that was grounded in capability, transparent about limitation, and consistently aligned with user expectations.